Last month, the Oversight Board upheld Facebook’s suspension of former US President Donald Trump’s Facebook and Instagram accounts following his praise for people engaged in violence at the Capitol on January 6.

But in doing so, the board criticized the open-ended nature of the suspension, stating that “it was not appropriate for Facebook to impose the indeterminate and standardless penalty of indefinite suspension.”

The board instructed us to review the decision and respond in a way that is clear and proportionate, and made a number of recommendations on how to improve our policies and processes.

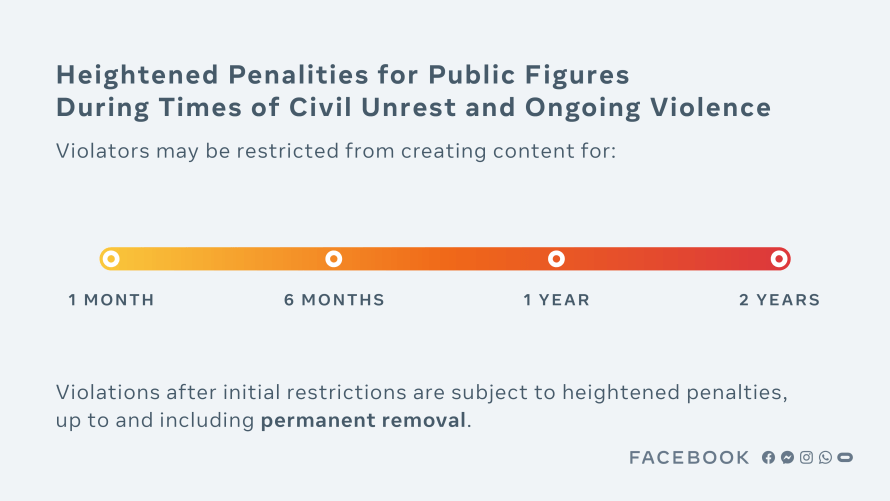

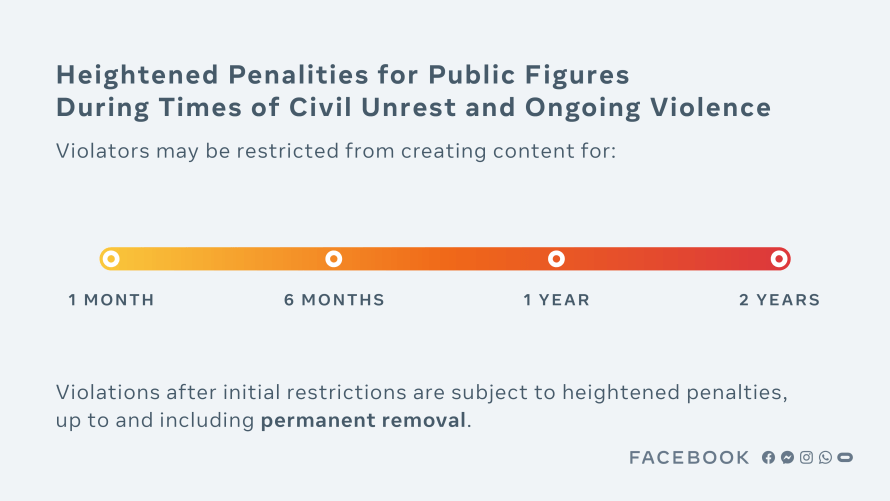

We are today announcing new enforcement protocols to be applied in exceptional cases such as this, and we are confirming the time-bound penalty consistent with those protocols which we are applying to Mr. Trump’s accounts.

Given the gravity of the circumstances that led to Mr. Trump’s suspension, we believe his actions constituted a severe violation of our rules that merits the highest penalty available under the new enforcement protocols.

We are suspending his accounts for two years, effective from the date of the initial suspension on January 7 this year.

At the end of this period, we will look to experts to assess whether the risk to public safety has receded. We will evaluate external factors, including instances of violence, restrictions on peaceful assembly and other markers of civil unrest.

If we determine that there is still a serious risk to public safety, we will extend the restriction for a set period of time and continue to re-evaluate until that risk has receded.

When the suspension is eventually lifted, there will be a strict set of rapidly escalating sanctions that will be triggered if Mr. Trump commits further violations in future, up to and including permanent removal of his pages and accounts.

In establishing the two-year sanction for severe violations, we considered the need for it to be long enough to allow a safe period of time after the acts of incitement, to be significant enough to be a deterrent to Mr. Trump and others from committing such severe violations in future, and to be proportionate to the gravity of the violation itself.

We are grateful that the Oversight Board acknowledged that our original decision to suspend Mr. Trump was right and necessary, in the exceptional circumstances at the time.

But we absolutely accept that we did not have enforcement protocols in place adequate to respond to such unusual events. Now that we have them, we hope and expect they will only be applicable in the rarest circumstances.

We know that any penalty we apply — or choose not to apply — will be controversial. There are many people who believe it was not appropriate for a private company like Facebook to suspend an outgoing President from its platform, and many others who believe Mr. Trump should have immediately been banned for life.

We know today’s decision will be criticized by many people on opposing sides of the political divide — but our job is to make a decision in as proportionate, fair and transparent a way as possible, in keeping with the instruction given to us by the Oversight Board.

Of course, this penalty only applies to our services — Mr. Trump is and will remain free to express himself publicly via other means. Our approach reflects the way we try to balance the values of free expression and safety on our services, for all users, as enshrined in our Community Standards.

Other social media companies have taken different approaches — either banning Mr. Trump from their services permanently or confirming that he will be free to resume use of their services when conditions allow.

Accountability and Transparency

The Oversight Board’s decision is accountability in action. It is a significant check on Facebook’s power, and an authoritative way of publicly holding the company to account for its decisions. It was established as an independent body to make binding judgments on some of the most difficult content decisions Facebook makes, and to offer recommendations on how we can improve our policies.

As today’s announcements demonstrate, we take its recommendations seriously and they can have a significant impact on the composition and enforcement of Facebook’s policies.

Its response to this case confirms our view that Facebook shouldn’t be making so many decisions about content by ourselves. In the absence of frameworks agreed upon by democratically accountable lawmakers, the board’s model of independent and thoughtful deliberation is a strong one that ensures important decisions are made in as transparent and judicious a manner as possible.

The Oversight Board is not a replacement for regulation, and we continue to call for thoughtful regulation in this space.

We are also committing to being more transparent about the decisions we make and how they impact our users. Alongside our updated enforcement protocols, we are publishing our strike system, so that people know what actions our systems will take if they violate our policies.

And earlier this year, we launched a feature called ‘account status’, so people can see when content was removed, why, and what the penalty was.

In response to a recommendation by the Oversight Board, we are also providing more information in our Transparency Center about our newsworthiness allowance and how we apply it.

We allow certain content that is newsworthy or important to the public interest to remain on our platform — even if it might otherwise violate our Community Standards. We may also limit other enforcement consequences, such as demotions, when it is in the public interest to do so.

When making these determinations, however, we will remove content if the risk of harm outweighs the public interest.

We grant our newsworthiness allowance to a small number of posts on our platform. Moving forward, we will begin publishing the rare instances when we apply it.

Finally, when we assess content for newsworthiness, we will not treat content posted by politicians any differently from content posted by anyone else. Instead, we will simply apply our newsworthiness balancing test in the same way to all content, measuring whether the public interest value of the content outweighs the potential risk of harm from leaving it up.

Along with these changes, we have also taken substantial steps to respond to the other policy recommendations the board included in its decision.

Out of the board’s 19 recommendations, we are committed to fully implementing 15. We are implementing one recommendation in part, still assessing two recommendations, and taking no further action on one recommendation. Our full responses are available here.

DNT News